Welcome

Hello!

Thank you for visiting my site! My name is Sarah Khan and I am an MSCS grad student at Georgia Tech, specializing in Computational Perception and Robotics. I’m interested in robotic deployment in home environments for assistive tasks and assistive technology in general.

Academics

I recently completed some brief research projects in knowledge distillation and gesture recognition for mobile robotics.

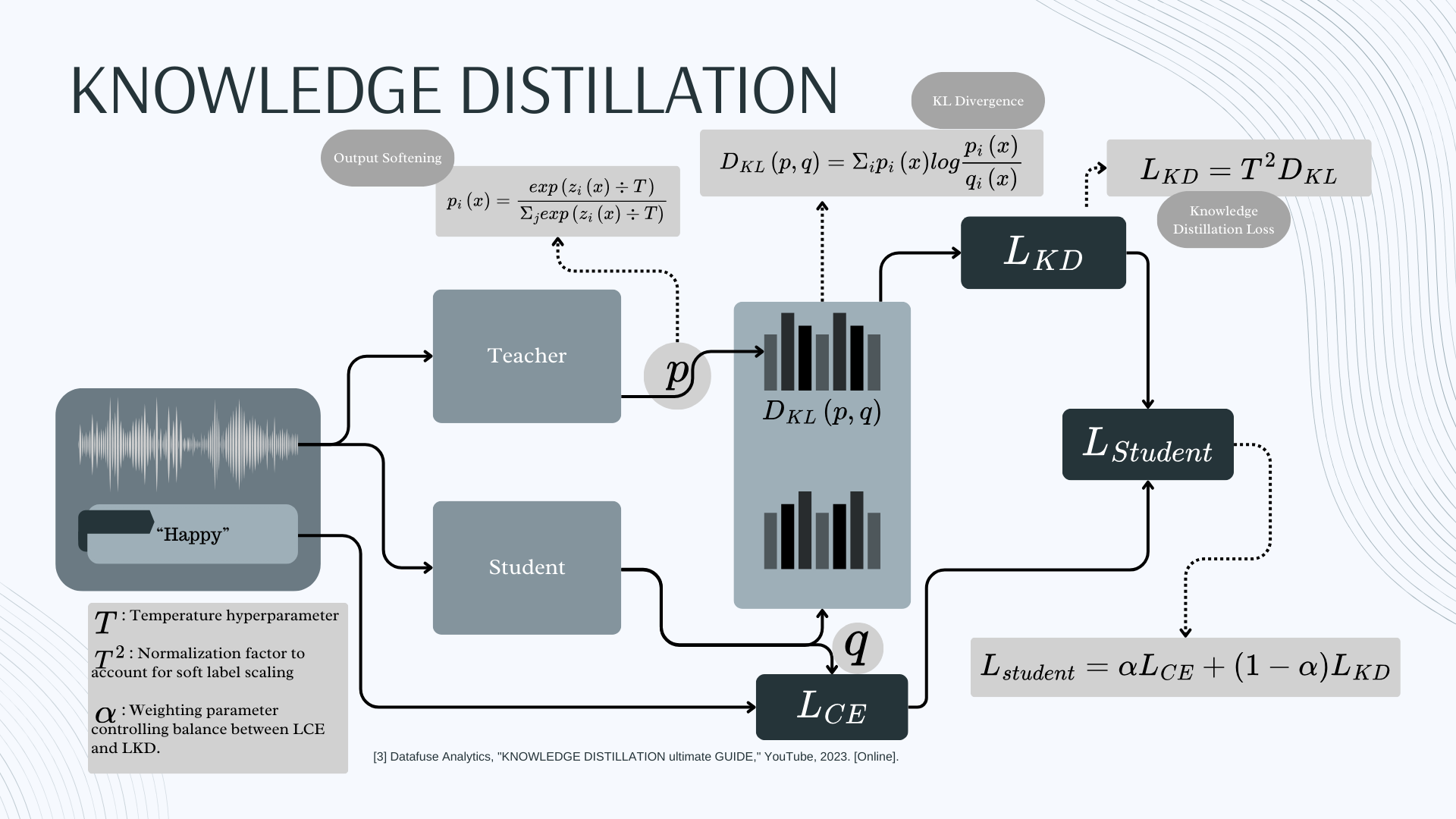

Knowledge Distillation

The knowledge distillation project used Wav2Vec2-base (95M parameters), fine-tuned on the TESS dataset, as the teacher model for a Speech Emotion Recognition (SER) classification task. We used the student models Wav2Small[1] (90K parameters) and Wav2Tiny (15K parameters) to evaluate the distillation process. The students were trained with a combined loss: a Cross Entropy loss on the student's own predictions plus a KL Divergence loss against the teacher's outputs.

- A. Baevski, H. Zhou, A. Mohamed, and M. Auli, “wav2vec 2.0: A framework for self-supervised learning of speech representations,” in Proceedings of the 34th Conference on Neural Information Processing Systems (NeurIPS 2020), Vancouver, Canada, 2020.
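As a rough illustration of the combined loss described above (not the project's actual training code), here is a minimal pure-Python sketch for a single example. The weighting factor `alpha`, the softmax `temperature`, the temperature-squared scaling of the KL term, and all function names are assumptions made for this sketch; the project itself may have combined the two terms differently.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities, optionally softened by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(student_logits, label):
    """Hard-label loss: negative log-probability of the true class."""
    return -math.log(softmax(student_logits)[label])


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


def distillation_loss(student_logits, teacher_logits, label,
                      alpha=0.5, temperature=2.0):
    """Weighted sum of student cross entropy and teacher-student KL divergence.

    alpha and temperature are hypothetical hyperparameters for this sketch.
    """
    hard = cross_entropy(student_logits, label)
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    # The temperature**2 factor is a common convention, assumed here.
    soft = kl_divergence(teacher_probs, student_probs) * temperature ** 2
    return alpha * hard + (1.0 - alpha) * soft
```

When the student's logits match the teacher's exactly, the KL term vanishes and only the weighted cross entropy remains, which is a quick sanity check for an implementation like this.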

UDA for Personalized Action Recognition in Mobile Robotics

We adapted the gesture recognition and categorization of the MMPose RTMO model to a unique set of gestures in the Anki Vector’s visual domain using unsupervised domain adaptation (UDA). We collected domain data using the Vector mobile robot and defined unique action response sequences for the new set of gestures.

These and additional projects related to graduate research and academic involvement can be seen here in the Academics section:

Knowledge Distillation for TinyML/Embedded AI: Model Distillation with Time Series Data

Knowledge distillation research project using Wav2Vec2-base and time series data.

Unsupervised Domain Adaptation for Personalized Action Recognition in Body Gesture Control for Mobile Robotics

Mobile robot gesture recognition using Unsupervised Domain Adaptation.

See Academics for more

Projects

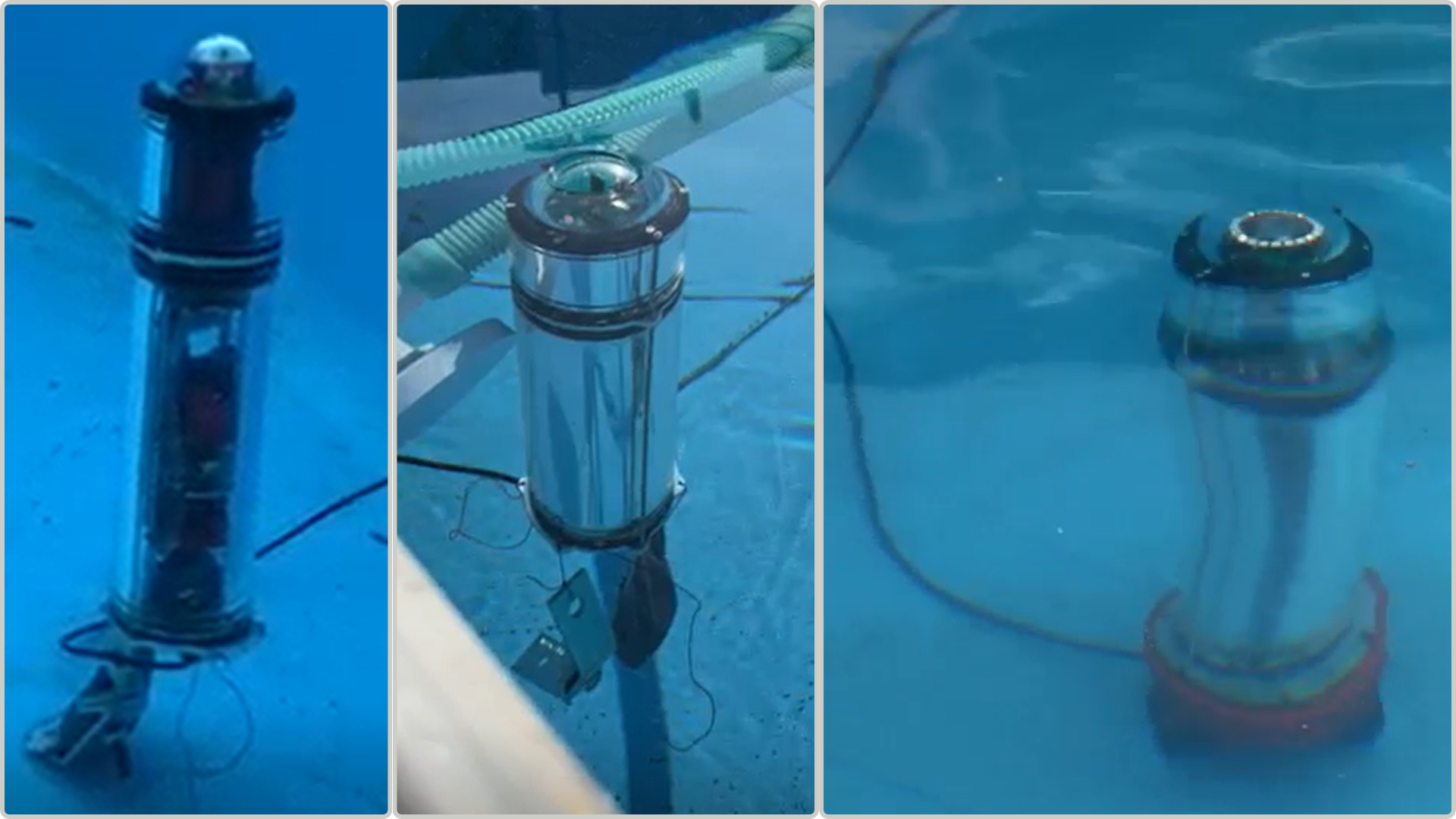

Floatation Device Embedded Software Development

I was the Lead Software Developer of the Vikings underwater robotics team in Long Beach, CA, where I developed the floatation device's embedded software and remote interface. The device is controlled remotely via Bluetooth and uses an internal state machine to conduct a complete underwater vertical profile, collecting pressure, temperature, and depth sensor data along the way. After it resurfaces and receives a command, it wirelessly transmits the data to the control center, which then automatically graphs the received data.
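To give a feel for how a state machine like this can be organized, here is a small Python sketch. It is not the device's firmware: the state names, the `"start"` and `"transmit"` command strings, the depth thresholds, and the `FloatController` class are all hypothetical, chosen only to mirror the profile-then-transmit behavior described above.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()        # waiting for a start command
    DESCENDING = auto()  # sinking toward the target depth
    ASCENDING = auto()   # returning to the surface
    SURFACED = auto()    # profile done, waiting for a transmit command


class FloatController:
    """Hypothetical controller; thresholds and command names are assumptions."""

    def __init__(self, target_depth_m, surface_depth_m=0.1):
        self.state = State.IDLE
        self.target_depth_m = target_depth_m
        self.surface_depth_m = surface_depth_m
        self.samples = []

    def handle_command(self, command):
        """Apply a remote command; return buffered samples on transmit, else None."""
        if command == "start" and self.state is State.IDLE:
            self.samples = []
            self.state = State.DESCENDING
        elif command == "transmit" and self.state is State.SURFACED:
            data, self.samples = self.samples, []
            self.state = State.IDLE
            return data
        return None

    def update(self, pressure_kpa, temperature_c, depth_m):
        """Record one sensor reading during a profile and advance the state."""
        if self.state in (State.DESCENDING, State.ASCENDING):
            self.samples.append((pressure_kpa, temperature_c, depth_m))
        if self.state is State.DESCENDING and depth_m >= self.target_depth_m:
            self.state = State.ASCENDING
        elif self.state is State.ASCENDING and depth_m <= self.surface_depth_m:
            self.state = State.SURFACED
```

In this sketch, commands that arrive in the wrong state are simply ignored, so a transmit request sent mid-profile cannot interrupt data collection.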

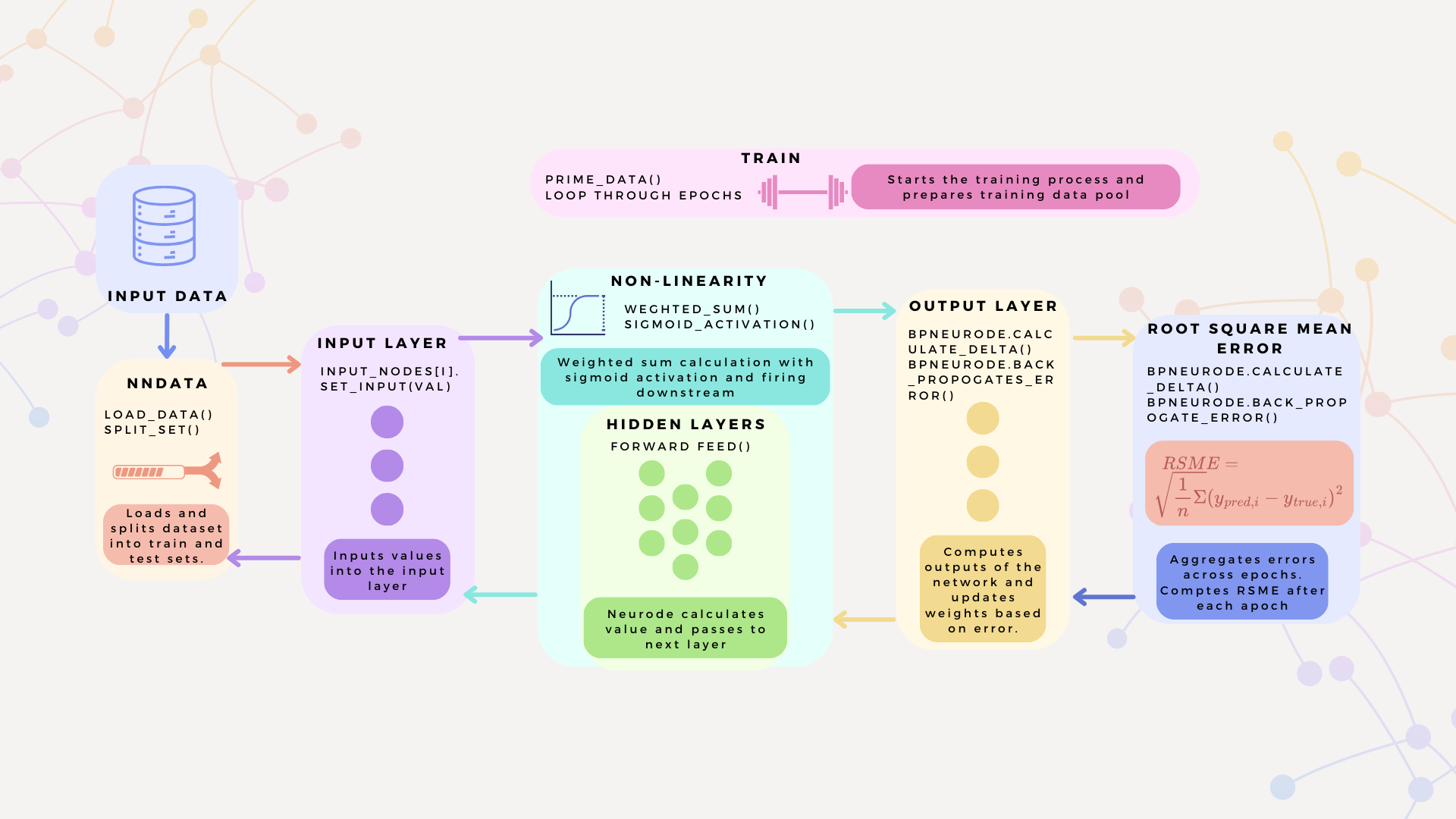

Forward Feeding Back Propagating Neural Network

I also developed a Forward Feeding Back Propagating Neural Network in Python. The forward feed uses a sigmoid activation function, and the loss is calculated using the Root Mean Squared Error (RMSE) metric.
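For readers who want to see the idea in miniature, here is a simplified sketch of a feedforward network with sigmoid activations trained by backpropagation on an RMSE loss. It is an illustration only, not the project's code: the single hidden layer, the `TinyNetwork` class, the learning rate, and the random initialization are all assumptions.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def rmse(predictions, targets):
    """Root Mean Squared Error over a batch of scalar predictions."""
    n = len(targets)
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(predictions, targets)) / n)


class TinyNetwork:
    """One hidden layer, one sigmoid output (hypothetical architecture)."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-1, 1) for _ in range(n_inputs)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        """Forward feed: return hidden activations and the output."""
        hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        output = sigmoid(sum(w * h for w, h in zip(self.w2, hidden)) + self.b2)
        return hidden, output

    def train_step(self, inputs, targets, lr=0.5):
        """One full-batch gradient step; returns the loss before the update."""
        passes = [self.forward(x) for x in inputs]
        loss = rmse([out for _, out in passes], targets)
        # d(RMSE)/d(prediction_k) = (prediction_k - target_k) / (n * RMSE)
        scale = 1.0 / (len(targets) * max(loss, 1e-12))
        gw1 = [[0.0] * len(row) for row in self.w1]
        gb1 = [0.0] * len(self.b1)
        gw2 = [0.0] * len(self.w2)
        gb2 = 0.0
        for x, (hidden, out), t in zip(inputs, passes, targets):
            d_out = (out - t) * scale * out * (1.0 - out)  # sigmoid derivative
            gb2 += d_out
            for j, h in enumerate(hidden):
                gw2[j] += d_out * h
                d_hidden = d_out * self.w2[j] * h * (1.0 - h)
                gb1[j] += d_hidden
                for i, xi in enumerate(x):
                    gw1[j][i] += d_hidden * xi
        # Apply the accumulated gradients.
        for j in range(len(self.w1)):
            for i in range(len(self.w1[j])):
                self.w1[j][i] -= lr * gw1[j][i]
            self.b1[j] -= lr * gb1[j]
            self.w2[j] -= lr * gw2[j]
        self.b2 -= lr * gb2
        return loss


# Usage: learn the logical OR of two inputs.
inputs = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
targets = [0.0, 1.0, 1.0, 1.0]
net = TinyNetwork(n_inputs=2, n_hidden=3)
losses = [net.train_step(inputs, targets) for _ in range(2000)]
```

Because the gradient of RMSE divides by the loss itself, the sketch guards against division by zero when the predictions match the targets exactly.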

These projects can be viewed in more detail here in the Projects section:

Floater

Floatation device performing automated vertical profiles with temperature, depth, and pressure data collection and transmission.

Forward Feeding Backpropagating Neural Network

Forward Feeding Back Propagation Neural Network architecture developed in Python.

See Projects for more